If you have a recent iPhone and open Photos.app, you’ll probably notice that some images seem to ‘pop’. Look closer, and you may realize that the rest of the UI now looks grey:

This is extended dynamic range (EDR), Apple’s method of seamlessly displaying HDR content alongside SDR content.

In building my camera app Composure Camera, I wanted to do the same thing, and ended up doing a deep dive into the world of EDR.

In part 1, I cover how to display and render HDR photos in SwiftUI and UIKit while in part 2, I cover EDR rendering with Metal and go deep into color spaces and transfer functions.

Gain Map HDR

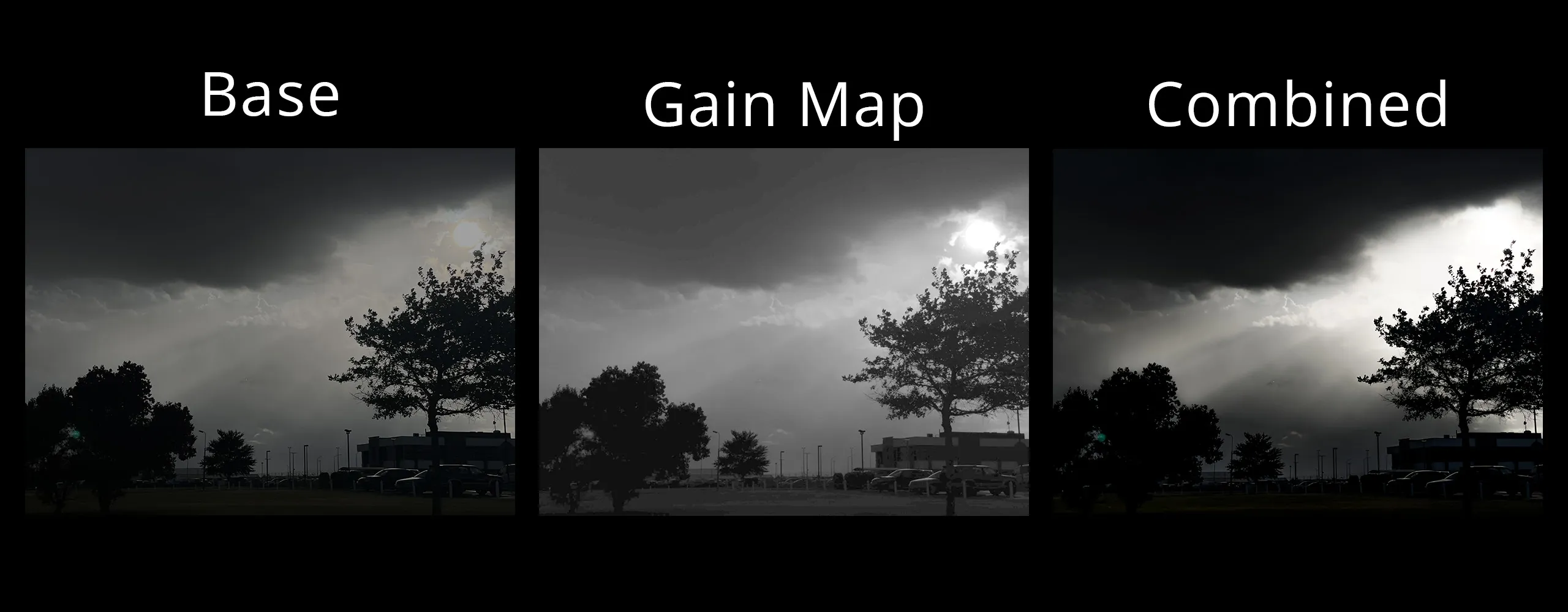

Gain map HDR is a backwards-compatible way of supporting HDR in photos by embedding a separate gain map image alongside the primary SDR image. iPhones have supported this since 2020, and this is the most common form of HDR photo you will see today.

The gain map is a lower-resolution, single-channel bitmap which is used to adjust the brightness of an image. Software that does not support this will simply ignore it, ensuring that the photo can be rendered in SDR without any issues.

This technique effectively increases the bit depth of the image, reducing the banding that would otherwise make displaying 8-bit images (the maximum bit depth supported by JPEG) in HDR unusable.

SDR visualization of how the gain map is combined with the SDR image to create the HDR image.
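To make the combination concrete, here is a minimal per-pixel sketch. It assumes the formula Apple publishes for its gain maps, where the linear SDR value is scaled by 1 + (headroom - 1) × gain. The function name, the scalar inputs, and the example headroom of 4.0 are illustrative assumptions; a real implementation works on whole images, upsamples the gain map to full resolution, and linearizes both inputs first.

```swift
/// Applies one gain map sample to one linear SDR channel value.
/// - Parameters:
///   - sdrLinear: linear-light SDR value in 0...1
///   - gain: linearized gain map sample in 0...1
///   - headroom: how many times brighter than SDR white the image may get (>= 1)
func applyGain(sdrLinear: Double, gain: Double, headroom: Double) -> Double {
    sdrLinear * (1.0 + (headroom - 1.0) * gain)
}

let headroom = 4.0  // hypothetical value; real images carry their own headroom

// A gain of 0 leaves the SDR pixel untouched.
let unchanged = applyGain(sdrLinear: 0.5, gain: 0.0, headroom: headroom)

// A gain of 1 on SDR white reaches the full headroom.
let boosted = applyGain(sdrLinear: 1.0, gain: 1.0, headroom: headroom)
```

Software that ignores the gain map effectively behaves as if the gain were 0 everywhere, which is why the SDR rendition is unaffected.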

Since embedded previews for DNG (RAW) files only support JPEG, and the other approach, ISO HDR, cannot be supported by JPEG files, any RAW or ProRAW files you take will use this approach for the foreseeable future.

Additionally, even normal HEIC files captured by Apple devices use this type of HDR image. Thus, if you want to support HDR for photos taken on iPhones, you will have to implement support for this.

Starting in iOS 17, Apple has finally provided an easy API to render these images.

As described at the very end of this WWDC session, we can now use:

    // `data` holds the encoded image file; `ciContext` is an existing CIContext
    guard let ciImage = CIImage(
        data: data,
        options: [.expandToHDR: true]
    ) else { return }

    // And now render to a bitmap
    guard let cgImage = ciContext.createCGImage(
        ciImage,
        from: ciImage.extent,
        // Half the size of .RGBA16. Don't use 8-bit formats
        format: .RGB10,
        // Can use any extended, extendedLinear, PQ, or HLG format
        colorSpace: .init(name: CGColorSpace.itur_2100_HLG)
    ) else { return }

This renders the image data to an EDR texture. I go into more detail on color spaces in part 2.

This process is more expensive than the standard ciContext.createCGImage(_:from:) call, even if the image does not contain a gain map. On a 12MP ProRAW JPEG preview (which contains a gain map), it took ~30 ms compared to 3 ms to render on an iPhone XS.

To detect if the image contains an HDR gain map, we can check the image’s EXIF metadata to determine if it has a headroom value. This metadata is embedded in Apple’s undocumented EXIF manufacturer tags, so it seems unlikely that this format will see use in non-Apple devices.

Note that reading the EXIF metadata with CGImageSourceCreateWithData and CGImageSourceCopyPropertiesAtIndex also takes a few milliseconds.
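As a rough sketch of what such a check could look like, the first helper below reads Apple's maker note dictionary with the two Image I/O calls mentioned above. Because the tags inside it are undocumented, it only returns the dictionary for inspection rather than assuming which key holds the headroom. The second helper uses a different technique from the EXIF check: it asks Image I/O whether the file carries gain map auxiliary data at all. Both function names are hypothetical.

```swift
import Foundation
import ImageIO

/// Returns Apple's maker note dictionary for the first image in `data`, if present.
/// The keys inside are undocumented, so callers have to inspect them manually.
func appleMakerNote(in data: Data) -> [String: Any]? {
    guard let source = CGImageSourceCreateWithData(data as CFData, nil),
          let properties = CGImageSourceCopyPropertiesAtIndex(source, 0, nil) as? [CFString: Any]
    else { return nil }
    return properties[kCGImagePropertyMakerAppleDictionary] as? [String: Any]
}

/// Alternative check (not the EXIF approach): returns true if the first image
/// in `data` has HDR gain map auxiliary data attached.
func containsGainMap(in data: Data) -> Bool {
    guard let source = CGImageSourceCreateWithData(data as CFData, nil) else { return false }
    return CGImageSourceCopyAuxiliaryDataInfoAtIndex(
        source, 0, kCGImageAuxiliaryDataTypeHDRGainMap
    ) != nil
}
```

Either result could be used to skip the more expensive `.expandToHDR` path for images that have nothing to expand.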

Once rendered, simply wrap the CGImage in a UIImage before displaying it in SwiftUI with the new iOS 17 View modifier:

    Image(uiImage: uiImage)
        .allowedDynamicRange(.high)

UIKit also has an equivalent property which can be set to enable EDR: UIImageView.preferredImageDynamicRange.
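For the UIKit side, a minimal sketch might look like the following. The helper name `makeHDRImageView(for:)` is hypothetical, and it assumes you already have a `CGImage` rendered into an HDR-capable color space as described earlier.

```swift
import UIKit

/// Wraps a rendered CGImage in an image view that is allowed to display it in EDR.
@available(iOS 17.0, *)
func makeHDRImageView(for cgImage: CGImage) -> UIImageView {
    let imageView = UIImageView(image: UIImage(cgImage: cgImage))
    imageView.contentMode = .scaleAspectFit
    // .high requests the full available headroom; .constrainedHigh requests a
    // reduced amount, and .standard forces SDR rendering.
    imageView.preferredImageDynamicRange = .high
    return imageView
}
```

The property is a preference rather than a guarantee, so the system may still render the image in SDR, for example on displays without any headroom.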

Apple is standardizing this under ISO/NP 21496-1. However, Adobe also has its own separate, incompatible gain map specification, which is used in Lightroom for HDR exports. From their support page, it seems that only Chrome desktop can view these images; as of iOS 17, neither Photos.app nor Core Image supports them. However, as the specification is open and well-documented, it seems feasible for an individual to implement this.

ISO HDR

ISO HDR is a newer method for HDR images being devised by Apple. Currently being standardized as ISO/TS 22028-5:2023, this gets rid of the separate gain map by natively encoding the image in HDR.

It requires the use of an HDR transfer function (hybrid log-gamma or perceptual quantizer) and a wide color space (the BT.2100, or equivalently BT.2020, color primaries). There are also a bunch of metadata requirements which I don’t understand.
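For a feel of what an HDR transfer function does, here is a small sketch of the perceptual quantizer (PQ) curve using the constants from SMPTE ST 2084 / BT.2100. It maps absolute luminance in nits to a 0...1 signal and back. The `PQ` namespace and function names are illustrative; in practice Core Graphics applies the curve for you when you pick a PQ color space.

```swift
import Foundation

enum PQ {
    // Constants as defined in SMPTE ST 2084 / ITU-R BT.2100
    static let m1 = 2610.0 / 16384.0
    static let m2 = 2523.0 / 4096.0 * 128.0
    static let c1 = 3424.0 / 4096.0
    static let c2 = 2413.0 / 4096.0 * 32.0
    static let c3 = 2392.0 / 4096.0 * 32.0

    /// Inverse EOTF: luminance in nits (0...10,000) to a non-linear signal (0...1).
    static func encode(nits: Double) -> Double {
        let y = pow(max(nits, 0) / 10_000, m1)
        return pow((c1 + c2 * y) / (1 + c3 * y), m2)
    }

    /// EOTF: non-linear signal (0...1) back to luminance in nits.
    static func decode(signal: Double) -> Double {
        let p = pow(max(signal, 0), 1 / m2)
        return 10_000 * pow(max(p - c1, 0) / (c2 - c3 * p), 1 / m1)
    }
}

let sdrWhiteSignal = PQ.encode(nits: 100)   // roughly 0.51
let peakSignal = PQ.encode(nits: 10_000)    // 1.0, up to rounding
```

Roughly half of the signal range is spent on luminance below 100 nits, which is part of why a 10-bit minimum is needed to avoid banding.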

It also requires a minimum bit depth of 10 bits, ruling out JPEG support. Additionally, Apple devices currently capture images in Display P3, not BT.2100. As such, until Apple switches to using this color space for the camera, this method will likely not see widespread use.

HDR videos captured on iPhone (as well as any professional HDR content) already use BT.2100, so it is not a stretch to imagine that Apple will switch photos to this color space in the future.

Apple’s HDR sample app saves images in the BT.2100 color space, but I haven’t otherwise encountered any images which use this color space.

The downside of this approach is the loss of backwards compatibility: if you have tried to play HDR video on non-Apple devices or older software, it is likely that you have seen these videos play looking washed out or plain wrong: the software must support the color space and transfer function to display the content correctly. This can be mitigated: when Apple switched from capturing JPEG to HEIF files, iOS and Photos.app gained the ability to convert HEIF images to JPEG when sharing to unsupported destinations.

Despite the downsides, supporting ISO HDR images in iOS 17 is easier than supporting tone-mapped HDR: just use the aforementioned .allowedDynamicRange modifier with your existing image constructors (e.g. UIImage(data:), Image(_:)).

RAW

RAW images (both Bayer RAW and ProRAW) can be rendered using CIRAWFilter, which was introduced back in 2021.

Rendering these images in EDR requires only a few minor modifications:

    guard let rawFilter = CIRAWFilter(imageData: data, identifierHint: uniformTypeIdentifier) else {
        return nil
    }

    // https://developer.apple.com/videos/play/wwdc2021/10160/?time=1108
    // Optional
    rawFilter.baselineExposure = 0.0
    rawFilter.shadowBias = 0.0
    rawFilter.boostAmount = 0.0
    rawFilter.localToneMapAmount = 0.0

    // Must be true for EDR
    rawFilter.isGamutMappingEnabled = true

    // 0 = no EDR, 1 = default EDR, 2 = max EDR
    rawFilter.extendedDynamicRangeAmount = 1.0

    let colorSpace = CGColorSpace(name: CGColorSpace.displayP3_PQ)!
    let context = CIContext(options: [.outputColorSpace: colorSpace])

    guard let outputImage = rawFilter.outputImage,
          let cgImage = context.createCGImage(
              outputImage,
              from: outputImage.extent,
              format: .RGB10,
              colorSpace: colorSpace
          ) else { return nil }

isGamutMappingEnabled must be true: when false, the image gets rendered in linear space and is limited to SDR.

Before realizing this, I spent a long time attempting to render to a Metal texture, and created a Metal EDR pipeline to do so, something I cover in part 2. This ended up not working due to performance issues: for whatever reason, CIContext.render(_:to:commandBuffer:bounds:colorSpace:) would sometimes take upwards of 30 seconds to render a 48MP ProRAW MAX image.

I picked the displayP3_PQ color space in this example and although it seems to work, I’m not exactly sure why: the documentation for allowedDynamicRange(_:) implies that the image must have an HDR color space and that BT.2100 is the only one.

Given that Apple’s WWDC session and a 2021 presentation on ISO HDR both state that BT.2100 is a requirement for ISO HDR, it seems that although iOS renders ISO HDR images with EDR, ISO HDR (and therefore BT.2100) is not itself a requirement for EDR rendering of images.

Conclusion

Although iOS 17 finally introduces native support for displaying HDR images, we are still in the early days of this technology, with three separate ways of supporting HDR. However, given that trillions of iPhone photos have been captured with Apple’s HDR gain map technique, this will be the primary type of HDR you should support today.

Adobe’s version, which has an open specification and lacks proprietary EXIF tags, will likely see more widespread adoption in the future. As for ISO HDR, supporting it is ‘free’ in iOS 17 with SwiftUI/UIKit, but it will likely take many years before we see any significant adoption.

Next up in part 2, I cover my misadventures in EDR rendering in Metal.